Just read "How Apple is giving design a bad name" and found it thoroughly thought-provoking. Maybe I have finally found that subject for a Master’s after all.

WPF: Re-creating VS2012 window glow

WPF is such a powerful technology that I’m reminded of the Perl mantra: there’s more than one way to do it. So rather than just giving you some code, this post also explains why I’ve used each of several WPF techniques, so that you can reuse them elsewhere too.

Window Glow

It seems such an easy thing to do at first: just add a bit of outer glow around the window to highlight it amongst the stark simplicity of modern windows. The most naive implementation of this would be to simply build up some glow around a single pixel of colour. In fact this (very bad) piece of code below does exactly that, just by layering several translucent borders:

<Window x:Class="VS2012OuterGlowArticle.MainWindow"
        xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
        xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
        Title="MainWindow" Height="350" Width="525">
    <Border Padding="1" CornerRadius="8">
        <Border.Background>
            <SolidColorBrush Color="DarkOrange" Opacity="0.06"/>
        </Border.Background>
        <Border Padding="1" CornerRadius="8">
            <Border.Background>
                <SolidColorBrush Color="DarkOrange" Opacity="0.06"/>
            </Border.Background>
            <Border Padding="1" CornerRadius="8">
                <Border.Background>
                    <SolidColorBrush Color="DarkOrange" Opacity="0.06"/>
                </Border.Background>
                <Border Padding="1" CornerRadius="8">
                    <Border.Background>
                        <SolidColorBrush Color="DarkOrange" Opacity="0.06"/>
                    </Border.Background>
                    <Border Padding="1">
                        <Border.Background>
                            <SolidColorBrush Color="DarkOrange" Opacity="0.06"/>
                        </Border.Background>
                        <Border BorderBrush="DarkOrange" BorderThickness="1" Background="White"/>
                    </Border>
                </Border>
            </Border>
        </Border>
    </Border>
</Window>
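Why does stacking five borders produce a gradient at all? Each Border’s background covers its own 1px padding ring and everything inside it, so the ring k pixels in from the outer edge ends up under k of the 6% layers. A minimal sketch of that arithmetic, assuming ordinary source-over compositing of same-coloured layers (the LayeredGlow class is purely illustrative):

```csharp
using System;

// Illustrative only: how opaque each 1px ring of the layered-border glow is.
// Assumes source-over compositing, where a layer of opacity a lets (1 - a)
// of whatever is behind it show through.
public static class LayeredGlow
{
    // Opacity of a ring that sits under 'layers' identical translucent backgrounds.
    public static double RingOpacity(double layerOpacity, int layers)
    {
        if (layers < 0) throw new ArgumentOutOfRangeException(nameof(layers));
        return 1.0 - Math.Pow(1.0 - layerOpacity, layers);
    }

    // One entry per ring, from the outermost ring (1 layer) inwards.
    public static double[] Rings(double layerOpacity, int layerCount)
    {
        var result = new double[layerCount];
        for (int i = 0; i < layerCount; i++)
            result[i] = RingOpacity(layerOpacity, i + 1);
        return result;
    }
}
```

LayeredGlow.Rings(0.06, 5) climbs from 0.06 at the outer edge to roughly 0.27 beside the 1px border, which is part of why the result looks faint and stepped.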

Obviously there are a few flaws with this, but it does highlight exactly why I think WPF is a great advance over WinForms. The primitives that we can build from in WPF are very flexible and can be used to create fabulous effects even when we don’t know another way to do it.

Effects

But WPF also provides some great built-in effects, and one of these makes our life much simpler. We can use the DropShadowEffect with ShadowDepth set to 0, so the shadow sits evenly on every side, to give us an outer glow with far less code:

<Window x:Class="VS2012OuterGlowArticle.MainWindow"
        xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
        xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
        Title="MainWindow" Height="100" Width="200">
    <Border BorderBrush="DarkOrange" BorderThickness="1" Background="White" Margin="5">
        <Border.Effect>
            <DropShadowEffect ShadowDepth="0" BlurRadius="5" Color="DarkOrange"/>
        </Border.Effect>
    </Border>
</Window>

Outside not inside

Now you might be wondering why I haven’t tried to put the glow on the outside of the window so far. There is a very simple reason: even in the post-Vista world, windows still have hard region limits. If we add the effect to the window itself, it attempts to draw outside of the region and simply gets clipped.

<Window x:Class="VS2012OuterGlowArticle.MainWindow"
        xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
        xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
        Title="MainWindow" Height="350" Width="525">
    <Window.Effect>
        <!-- This doesn't work, shame -->
        <DropShadowEffect ShadowDepth="0" BlurRadius="5" Color="DarkOrange"/>
    </Window.Effect>
    <Grid>
    </Grid>
</Window>

Hmmm so maybe that’s why…

So at this point, we can see that we are going to need to provide the transparency inside the region of our window. Maybe that’s why the VS2012 window is drawn with a completely custom chrome?

If we just try setting Window.Background="Transparent", nothing happens again, but that is primarily because we haven’t also turned on Window.AllowsTransparency="True". Unfortunately, running the code at this point results in an error telling us that the only acceptable WindowStyle is WindowStyle="None".

<Window x:Class="VS2012OuterGlowArticle.MainWindow"
        xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
        xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
        Title="MainWindow" Height="100" Width="200"
        AllowsTransparency="True" WindowStyle="None" Background="Transparent">
    <Border BorderBrush="DarkOrange" BorderThickness="1" Background="White" Margin="5">
        <Border.Effect>
            <DropShadowEffect ShadowDepth="0" BlurRadius="5" Color="DarkOrange"/>
        </Border.Effect>
    </Border>
</Window>

As you can see, we now have our glow but have lost everything else. However, I think this once again helps us, as it frees us from the constraints of the OS look and lets us do whatever else we want. You can get most of the functionality back by integrating the Microsoft.Windows.Shell library. This is a superset of System.Windows.Shell and includes support for extending glass into the window, which we can subvert to provide such things as window dragging and resize borders. Normally I have all of this in a style, and the relevant part looks like this:

<Setter Property="shell:WindowChrome.WindowChrome">
    <Setter.Value>
        <shell:WindowChrome ResizeBorderThickness="4" CaptionHeight="24"
                            CornerRadius="0" GlassFrameThickness="0"/>
    </Setter.Value>
</Setter>

For now, however, I’m going to simply add a close button:

<Grid>
    <Button HorizontalAlignment="Right" VerticalAlignment="Top"
            Background="DarkOrange" Foreground="White" BorderThickness="0"
            Click="Window_Close">
        X
    </Button>
</Grid>

Maximised windows

Even though we haven’t provided the UI to let the user maximise the window with the mouse, you can still use the Windows 7 shortcuts (Windows+Up/Left/Right), and there is a problem. Remember that margin we needed around our border to leave room for the glow? Well, it’s still there.

If you maximise your window you are also still left with the single-pixel Border all round your window, but we can deal with this quite easily. I first used a custom converter on a Binding from WindowState that produces a zero Thickness for the border when WindowState="Maximized".

But if you snap your window to the left or right with Windows+Left/Right you can also see it quite plainly, and this time the window hasn’t changed state, just size.

Well, I suppose I could just check when the window size changes and turn off the relevant borders, but unfortunately there doesn’t seem to be an easy way in WPF to find the size of the working area (i.e. the part without the taskbar) of the current screen. You can get it for the primary screen, but not for the current one. Which is fine as long as you are sure nobody is ever going to run your application on multiple monitors.
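For illustration, the missing piece is a lookup from the window’s position to the working area of the monitor it is actually on. A minimal sketch in plain C#, assuming we already have a list of monitors with their bounds and working areas. The ScreenRect, MonitorInfo and ScreenPicker types are hypothetical, and the "centre of the window" rule is a simplification: WinForms’ Screen.FromHandle instead picks the display containing the largest portion of the window.

```csharp
using System.Collections.Generic;

// Hypothetical rectangle type: left/top inclusive, right/bottom exclusive.
public struct ScreenRect
{
    public double Left, Top, Right, Bottom;

    public ScreenRect(double left, double top, double right, double bottom)
    {
        Left = left; Top = top; Right = right; Bottom = bottom;
    }

    public bool Contains(double x, double y) =>
        x >= Left && x < Right && y >= Top && y < Bottom;
}

// Hypothetical description of one monitor.
public sealed class MonitorInfo
{
    public ScreenRect Bounds;       // the whole display
    public ScreenRect WorkingArea;  // the display minus the taskbar
    public bool IsPrimary;
}

public static class ScreenPicker
{
    // Returns the monitor containing the centre of the window, or the
    // primary monitor if the centre is not on any screen.
    public static MonitorInfo FromWindow(IEnumerable<MonitorInfo> monitors,
        double left, double top, double width, double height)
    {
        double centreX = left + width / 2;
        double centreY = top + height / 2;
        MonitorInfo primary = null;

        foreach (var monitor in monitors)
        {
            if (monitor.Bounds.Contains(centreX, centreY))
                return monitor;
            if (monitor.IsPrimary)
                primary = monitor;
        }
        return primary;
    }
}
```

ScreenPicker.FromWindow(monitors, window.Left, window.Top, window.Width, window.Height).WorkingArea would then stand in for SystemParameters.WorkArea, which only describes the primary screen.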

In fact, some investigation of this led me to http://stackoverflow.com/questions/1927540/how-to-get-the-size-of-the-current-screen-in-wpf and an answer by Nils http://stackoverflow.com/users/180156/nils, where he wraps the WinForms Screen class in a nice class called WpfScreen, which gives us the working area of the screen the window is actually on.

This allows the border thickness and the margin (where the glow appears) to be calculated like this:

if (window.WindowState == WindowState.Maximized)
{
    // No point in glowing when you can't see the glow
    border.Margin = new Thickness(0);
    border.BorderThickness = new Thickness(0);
    return;
}

// Snapped windows are a little more awkward to detect: the window state
// doesn't change, but the window fills the working area vertically
var workingArea = WpfScreen.GetScreenFrom(window).WorkingArea;
if (window.Top == workingArea.Top && window.Height == workingArea.Height)
{
    bool snappedLeft = window.Left == workingArea.Left;
    bool snappedRight = window.Left + window.Width == workingArea.Right;
    border.Margin = new Thickness(
        snappedLeft ? 0 : GLOW_BORDER_MARGIN, 0,
        snappedRight ? 0 : GLOW_BORDER_MARGIN, 0);
    border.BorderThickness = new Thickness(
        snappedLeft ? 0 : 1, 0,
        snappedRight ? 0 : 1, 0);
    return;
}

// A normal floating window glows all round
border.Margin = new Thickness(GLOW_BORDER_MARGIN);
border.BorderThickness = new Thickness(1);

And as they say, here’s one I made earlier; notice how the top glow is missing since it’s snapped to the left-hand side.
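Because the snap check is pure arithmetic on the window and working-area rectangles, it can be pulled out of the window and tested on its own. A minimal sketch of the same rules with plain numbers; the Edges struct stands in for WPF’s Thickness, and both it and GlowLayout are hypothetical names, not part of WPF or WpfScreen:

```csharp
// Hypothetical stand-in for WPF's Thickness (left, top, right, bottom).
public struct Edges
{
    public readonly double Left, Top, Right, Bottom;

    public Edges(double left, double top, double right, double bottom)
    {
        Left = left; Top = top; Right = right; Bottom = bottom;
    }

    public Edges(double uniform) : this(uniform, uniform, uniform, uniform) { }
}

public static class GlowLayout
{
    // Same rules as the snippet above: returns the glow margin and the
    // thickness of the 1px border for the given window geometry.
    public static (Edges Margin, Edges Border) Compute(
        bool isMaximized,
        double left, double top, double width, double height,
        double workLeft, double workTop, double workRight, double workHeight,
        double glowMargin)
    {
        // Maximised: the edges are off screen, so no glow and no border.
        if (isMaximized)
            return (new Edges(0), new Edges(0));

        // Snapped: the window fills the working area vertically.
        if (top == workTop && height == workHeight)
        {
            bool atLeft = left == workLeft;
            bool atRight = left + width == workRight;
            return (
                new Edges(atLeft ? 0 : glowMargin, 0, atRight ? 0 : glowMargin, 0),
                new Edges(atLeft ? 0 : 1, 0, atRight ? 0 : 1, 0));
        }

        // Floating: glow and border all round.
        return (new Edges(glowMargin), new Edges(1));
    }
}
```

A left-snapped window on a 1920×1040 working area gets a margin of (0, 0, glowMargin, 0) and a border of (0, 0, 1, 0). The exact == comparisons mirror the original; if windows can end up at fractional positions, a small tolerance may be safer.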

Reusability

Now at this point you have enough to go off and replicate this effect. Just add a Window_SizeChanged handler with the above snippet, add a border with its effect into the window XAML, include the Microsoft.Windows.Shell assembly for window resizing and repositioning, and you’re done. However, applications often need more than one window, and there’s always the next application too, so we want to avoid copying and pasting the code around.

Normally we would just use a derived class to handle this, but the problem here is that the user may already be using a UI framework that adds some functionality, possibly even a corporate standard one that they can’t change, and is now just looking to change the look and feel. We could use a mixin class to include the additional functionality, but then the user would still need to add initialisation code to the code-behind in order to trigger it, which isn’t really lookless.

Fortunately WPF provides the means: attached properties. We can define an attached property so that setting a value in the XAML triggers a registration process. Since we need to identify which border we are going to be switching on and off, I’ve chosen to set the property on that border itself, i.e.

<Border BorderThickness="1" BorderBrush="DarkOrange" WinChrome:Modern.GlowBorder="True">

...

</Border>

And finally

Since the ultimate form of reusability these days seems to come from open-sourcing one’s code, I’m dropping all of this into winchrome.codeplex.com.

Bad developers plagiarise, Good developers steal

I’ve been reading the conversation that Scott Hanselman kicked off about Good developers vs Google developers and I have to say that my own feelings are pretty much summed up by those of Rick Strahl.

Except for one point.

Like many of these issues, there is no black and white, but there is a lot of grey. Rick et al. have pointed out the advantages of using a reference library, such as the meta-library provided to us by the internet, but my point is not only that it’s desirable, but that it’s actually necessary to be a good developer.

Without the reference to see many examples, how can one determine what makes code better or worse?

Without somebody proposing good design, how do we identify bad?

In short, how do we avoid being an isolationist set of teams with many local maxima, instead of a cohesive profession where we can all benefit?

And with that thought in mind, I’m really glad I went to tonight’s LDNUG.

Using SpecFlow isn’t necessarily a good practice

There’s been a batch of questions on StackOverflow recently on the SpecFlow questions feed that follow a similar pattern.

- Specflow – How to move back to a previos step

- How to run gherkin scenario multiple times

- How to pass Command line argument to specflow test scenario

- How to manage global variables in Spec Flow

All these questions have one thing in common: they are definitely not using BDD. I’m not sure what they are doing; in one case, SpecFlow is used to set up a test run and gather performance data, but there is never a comparison or an assertion. I’d struggle to even call it testing, as the test must be performed manually by looking at the data.

But all this is a micro-level abstraction of a bigger problem, which it looks like Liz Keogh is feeling at the moment. Her latest post, Behaviour Driven Development – Shallow and Deep, definitely indicates that this phenomenon is not isolated to technical individuals trying out new ways of doing things and getting it wrong, but that those habits then seem to pass on to the organisations they work for.

I love the way that Liz talks about this problem in the context of having a conversation with somebody else. In fact I might just go for a little chat myself.

The Deployment maturity model

A long time ago I came across the Personal Threading Maturity Model and I still keep referring back to it as a measure of how much further I have to go. Today, however, it struck me that I could probably do with some other yardsticks to show how far off nirvana some of the things I’m doing really are. With that in mind I present the Deployment Maturity Model: my take on how things often work, how I can see them working if I really push, and how things work in organisations that get it.

Unaware

Uses a manual deployment strategy, such as xcopy/ad-hoc SQL, to change what they want where they want. Usually the production deployment is a bespoke process that is never practised in advance in other environments. This unfortunately makes deployments time-consuming and error-prone.

Authorisation/sign-off and security are totally incidental. Sometimes they are considered, but only the personal high standards of good developers prevent them from being abused.

Post-release testing is achieved by manually using the production system and confirming the new functionality. Rollback is achieved by taking a backup copy at the start of the release, and/or providing SQL rollback scripts.

Casual

Introduction of packages, such as MSIs, that ensure some components of a deployment can be upgraded as a single unit, such as application + shared dlls. However, for multi-tier applications the other tiers are commonly tightly coupled to these changes, e.g. database updates, so they require downtime and synchronisation between tiers. Packages can be deployed in dev, test and production, but often require additional manual interaction, such as switching back-end tier or service connections and making changes to the database.

Authorisation to release is always considered at this level with external RFC/Change management systems being the norm, but security/the ability to release to production is still often in the hands of the developer. Post-release testing is still manual. Rollback can now be achieved in part by redeploying the previous version packages, although a common problem is that ad-hoc changes have been made to configuration files, which need to be re-applied or manually backed up before the release occurs.

Rigid

Begin use of deployment management software. The software ensures that all components of a release are released at the same time. Releases happen much faster and more reliably, as the process is automated and the same process is used across development, test and production environments. Configuration should be a product of the deployment process, so values are injected during release to associate tiers/services together. All ad-hoc production changes should be banned.

Security is now locked down, with no write access to production for developers being the norm. Tools, however, are still not joined up between tracking the changes included (e.g. Jira), gathering authorisation (e.g. Remedy) and performing the release (e.g. OctopusDeploy). Downtime is reduced, as releases can be staggered within tiers. Strategies such as load balancing are used to keep the application constantly available while individual services are rotated out of service for upgrade.

Rollback is by re-releasing the previous version. Testing should also change to use automated test packs that can be run as soon as new environments are available, primarily in test environments, but also as shakedown packs that can be run in production post-release.

Flexible

Additional opportunities to utilise infrastructure are taken up, thanks to the ease with which deployments can now be achieved. Opportunities to create multiple instances of a tier or service are used, so that current and previous versions are always available, e.g. directing certain clients to a new front end, or pointing certain servers at new back-end services.

Applications and services are written to support switching new features on/off for subsets of usage, e.g. per client, or redirecting to alternate service instances, i.e. switching on a new version is a runtime application setting change, not a deployment.
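To make "switching on a new version is a runtime setting change" concrete, here is a minimal sketch of a per-client feature switch. Everything in it (the FeatureSwitches class and the feature and client names) is hypothetical, and a real system would load these settings from configuration that can be changed without a deployment rather than hold them in memory:

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-memory feature switches, scoped per client.
public sealed class FeatureSwitches
{
    private readonly Dictionary<string, HashSet<string>> _enabledFor =
        new Dictionary<string, HashSet<string>>(StringComparer.OrdinalIgnoreCase);
    private readonly HashSet<string> _enabledForAll =
        new HashSet<string>(StringComparer.OrdinalIgnoreCase);

    // Turn a feature on for one client (e.g. a pilot).
    public void EnableFor(string feature, string clientId)
    {
        if (!_enabledFor.TryGetValue(feature, out var clients))
        {
            clients = new HashSet<string>(StringComparer.OrdinalIgnoreCase);
            _enabledFor[feature] = clients;
        }
        clients.Add(clientId);
    }

    // Turn a feature on for everybody once it has proved itself.
    public void EnableForAll(string feature) => _enabledForAll.Add(feature);

    // Switching off is just another setting change, which keeps rollback cheap.
    public void Disable(string feature)
    {
        _enabledForAll.Remove(feature);
        _enabledFor.Remove(feature);
    }

    public bool IsEnabled(string feature, string clientId) =>
        _enabledForAll.Contains(feature) ||
        (_enabledFor.TryGetValue(feature, out var clients) && clients.Contains(clientId));
}
```

The application code asks IsEnabled at the point of use, so the same build can serve old and new behaviour side by side.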

Deployment tools now start to link up, and orchestration of how/when to perform the release becomes an additional feature. Some systems may even allow you to define the future state of your environment and let the software work out which pieces need releasing.

Release testing can now be performed in production with pilot subsets, in advance of migrating true production processing onto it, and releases are then staggered by moving subsets over one at a time. Rollback is now trivial: use the instance of the service that is still on the previous version, which remains live until it is later decommissioned.
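The pilot-then-migrate approach, and why rollback becomes trivial, both come down to routing. A minimal sketch, assuming a hypothetical VersionRouter that maps client ids to named service instances (the instance and client names are made up):

```csharp
using System.Collections.Generic;

// Hypothetical router: each client resolves to a named service instance.
// Pilots and subsets are moved to the new instance first; rollback simply
// points them back at the instance still running the previous version.
public sealed class VersionRouter
{
    private readonly Dictionary<string, string> _overrides = new Dictionary<string, string>();

    public string DefaultInstance { get; private set; }

    public VersionRouter(string defaultInstance)
    {
        DefaultInstance = defaultInstance;
    }

    // Move a subset of clients (a pilot, or the next batch) to an instance.
    public void Move(IEnumerable<string> clientIds, string instance)
    {
        foreach (var id in clientIds)
            _overrides[id] = instance;
    }

    // Once everyone has migrated, make the new instance the default.
    public void Promote(string instance)
    {
        DefaultInstance = instance;
        _overrides.Clear();
    }

    public string Resolve(string clientId) =>
        _overrides.TryGetValue(clientId, out var instance) ? instance : DefaultInstance;
}
```

Until Promote is called, the old instance stays live for everyone who hasn’t moved, which is what allows it to remain in place until it is decommissioned.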

Optimising

Support for changing services and tiers is embraced across the entire architecture. Infrastructure is fully utilised to support multiple versions of service instances and ensure balanced load. Since the release process is proven and trivial, continuous deployment should be used to automatically deploy to production every candidate release that meets the quality gates, so that change is incremental. Load balancing is used heavily, so that release downtime is zero and the impact from a release, e.g. on load performance, is negligible.

Deployment tools need to be completely joined up or face irrelevance, e.g. authorisation to release simply becomes one of the quality gates leading to automated release and, with a good testing framework, could be given automatically if the criteria for acceptance can be defined and verified electronically.

Rollback happens automatically should issues be detected. Rolling forward is as trivial as it can be.

Some links

If you read only one link, start with this one http://timothyfitz.com/2009/02/10/continuous-deployment-at-imvu-doing-the-impossible-fifty-times-a-day/

Using NuGet 2.5 to deliver unmanaged dlls

A little while ago I looked at a way to deliver unmanaged dlls via NuGet 2.0. Well, now there’s NuGet 2.5 and they’ve only gone and fixed all the problems.

The two problems left unsolved from before were:

- How to package the set of dlls

- How to inject our new build target into msbuild so that it copies over our dlls

Introducing native dlls

The first new feature in 2.5 is Supporting Native projects. It’s quite simple: underneath your lib folder you can now add a new folder type, native. The first thing you will notice when you try this out is that you can’t just ship a set of lib\native files; you need something else.

Could not install package ‘MyPackage 1.0.1’. You are trying to install this package into a project that targets ‘.NETFramework,Version=v4.5’, but the package does not contain any assembly references or content files that are compatible with that framework. For more information, contact the package author.

You can simply add a content folder with a readMe.txt or similar, to get around this issue.

Installing a package built like this reveals that although the files end up copied into the packages\myPackage.vx.x.x\lib\native folder, nothing happens to them when you run a build.

Automatically including .props and .targets

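The second new feature is Automatic import of msbuild targets and props files. In short, if you add a .props file to the build folder of your package it gets imported at the start of your .csproj, and if you add a .targets file it gets imported at the end; the file needs to be named after the package id (e.g. build\MyPackage.targets) to be picked up. This is where I refer back to my earlier post, and we can now copy over that little script. Because the file now lives inside the package, the version below finds the dlls relative to itself and uses its own target name, so that it doesn’t replace an AfterBuild target already defined in the project.

<?xml version="1.0" encoding="utf-8"?>
<Project ToolsVersion="4.0" xmlns="http://schemas.microsoft.com/developer/msbuild/2003">
  <!-- Runs after the normal build without overriding the project's own AfterBuild -->
  <Target Name="CopyMyPackageNativeDlls" AfterTargets="Build">
    <ItemGroup>
      <!-- This file sits in <package>\build, so lib\native is one level up -->
      <MyPackageSourceFiles Include="$(MSBuildThisFileDirectory)..\lib\native\*.*"/>
    </ItemGroup>
    <Copy SourceFiles="@(MyPackageSourceFiles)"
          DestinationFolder="$(OutputPath)" />
  </Target>
</Project>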

Using NuGet to supply non managed dlls

NuGet is great for supplying managed dlls, but refuses to let you add references to unmanaged ones. Yet it would still be the perfect format for shipping them.

There are already some NuGet packages out there that do clever things, one of my favourites is OctoPack from OctopusDeploy. Instead of just adding some dlls, this NuGet package modifies your .csproj so that if you are doing a release build then it will call extra commands to package up your build artefacts into a deployable package. Now this gives me an idea.

First, the test

First of all, we’ll start with a very simple program, which will tell us whether our wonderful external dll has been made available at run time or not.

using System;
using System.IO;

class Program
{
    static void Main(string[] args)
    {
        // The file we expect the package to have copied next to the executable
        const string FakeFile = "fake.externalDll";

        var exists = File.Exists(FakeFile);
        var output = string.Format("{0} {1} found in {2}",
            FakeFile, exists ? "is" : "isn't", Environment.CurrentDirectory);

        Console.WriteLine(output);
        Console.ReadKey();
    }
}

Basically if we F5 the solution, we will now get told if we have successfully hacked something together.

Iteration 1

Now to start with, I’m not going to have a finished product. So I’m going to start by manually changing my .csproj. Later iterations will script this.

If you’ve ever unloaded and edited a .csproj then you’ve probably already seen the commented out section at the bottom,

<!-- To modify your build process, add your task inside one of the targets below and uncomment it.
     Other similar extension points exist, see Microsoft.Common.targets.
<Target Name="BeforeBuild">
</Target>
<Target Name="AfterBuild">
</Target>-->

After a little judicious digging into MSBuild, we find that these are placeholders for our own tasks, so we can add the equivalent of a post-build step:

<Target Name="AfterBuild">
  <ItemGroup>
    <MyPackageSourceFiles Include="$(MSBuildProjectDirectory)\..\Packages\FakePackage\*.*"/>
  </ItemGroup>
  <Copy SourceFiles="@(MyPackageSourceFiles)"
        DestinationFolder="$(OutputPath)">
  </Copy>
</Target>

And the resulting output

fake.externalDll is found in c:\users\al\documents\visual studio 2012\Projects\DllReferencingDemo\DllReferencingDemo\bin\Debug

Reactive UI: Doing It Better

I thought I had the hang of ReactiveUI, but when I got told I was Doing It Wrong™ I took the opportunity to reconsider how I might start Doing It Better.

Never write anything in the setter in ReactiveUI other than RaiseAndSetIfChanged – if you are, you’re definitely Doing It Wrong™ – Paul Betts

With that in mind, I wondered how I might achieve that. Firstly, it’s going to make all my properties much simpler; in fact, my initial thought was how to wire a ViewModel property back to its model property if I can’t use the setter. In the past I would have used

// This is wrong
public bool IsSet
{
    get { return _model.IsSet; }
    set
    {
        this.RaisePropertyChanging(x => x.IsSet);
        _model.IsSet = value;
        this.RaisePropertyChanged(x => x.IsSet);
    }
}

However that’s not very observable. So if instead we stick with the idea of making the property only do the notification, then we need something to update the model property on the view model change. Well that sounds just like an observable to me.

public bool IsSet
{
    get { return _IsSet; }
    set { this.RaiseAndSetIfChanged(x => x.IsSet, value); }
}

and in the constructor

this.ObservableForProperty(x => x.IsSet).Subscribe(x => _model.IsSet = x.Value);
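The same shape can be shown without ReactiveUI at all. A minimal sketch in plain C#, using INotifyPropertyChanged in place of ReactiveObject: the setter only assigns and notifies, and a separate subscription made in the constructor pushes the value into the model. ToggleModel, ToggleViewModel and SetIfChanged are hypothetical names, and SetIfChanged only approximates what RaiseAndSetIfChanged does:

```csharp
using System.Collections.Generic;
using System.ComponentModel;
using System.Runtime.CompilerServices;

// Hypothetical model.
public sealed class ToggleModel
{
    public bool IsSet { get; set; }
}

public sealed class ToggleViewModel : INotifyPropertyChanged
{
    private readonly ToggleModel _model;
    private bool _isSet;

    public event PropertyChangedEventHandler PropertyChanged;

    public ToggleViewModel(ToggleModel model)
    {
        _model = model;
        _isSet = model.IsSet;

        // React to the notification instead of doing work in the setter.
        PropertyChanged += (sender, e) =>
        {
            if (e.PropertyName == nameof(IsSet))
                _model.IsSet = _isSet;
        };
    }

    public bool IsSet
    {
        get { return _isSet; }
        set { SetIfChanged(ref _isSet, value); }
    }

    // Assigns the field and raises PropertyChanged only when the value changes.
    private void SetIfChanged<T>(ref T field, T value, [CallerMemberName] string name = null)
    {
        if (EqualityComparer<T>.Default.Equals(field, value))
            return;
        field = value;
        PropertyChanged?.Invoke(this, new PropertyChangedEventArgs(name));
    }
}
```

The model is updated as a consequence of the notification rather than inside the setter, so anything else that needs to react can subscribe in exactly the same way.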

Now we have a building block that enables us to build up the complicated functionality that we need.

7 years of good development in 5 minutes

First, here’s the idea of TDD

http://www.jamesshore.com/Blog/Red-Green-Refactor.html

Now here’s the concept of strict TDD, this example uses NUnit which is “A Good Thing”.

http://gojko.net/2009/02/27/thought-provoking-tdd-exercise-at-the-software-craftsmanship-conference/

Now you need something to try it out on, so here’s a kata

http://www.butunclebob.com/ArticleS.UncleBob.TheBowlingGameKata (you want to click on the word “Here” to get the slides)

So here’s an exercise,

1. spend 20 minutes using the kata to write some code – literally set a timer. Don’t worry if you don’t get very far

2. Delete your code

3. Repeat every day for a week, compare your progress. (If you are keen repeat more often, but it needs a whole week to distil)

Watch this presentation

http://gojko.net/2011/02/04/tdd-breaking-the-mould/

Still happy with what you did?

Now the point of the kata is that it removes the business analysis element from your coding to turn it into an exercise, which isn’t very real world, so…

Introducing BDD

http://dannorth.net/introducing-bdd/

Download SpecFlow http://www.specflow.org/

Try the kata again, but this time in BDD, again try it out for a week or so…

Remember this slide from the presentation? The outer circle implies business-level tests (think SpecFlow), and the inner circle implies technical-level tests (think something simpler, maybe NUnit). Again, try it out for a while.

And finally, talk to me about your experiences…

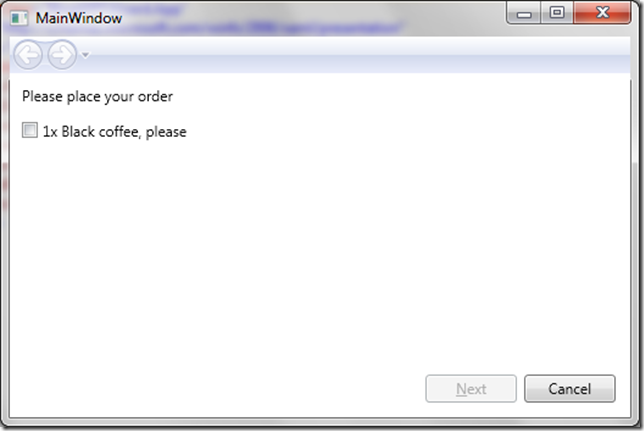

WPF Wizards part 2 – Glass

See also WPF Wizards – Part 1

The big difference between the wizard from part 1 and how a system wizard looks, for me, is that the glass header doesn’t extend down. Fortunately there are lots of tutorials that can help with the mechanics of how it works so I’m going to simply present my own solution, which demonstrates some useful WPF tactics.

Defining a Style

Since you will obviously want to reuse this code, I’m going to define a style, we start with something pretty simple.

<Style x:Key="Win7Wizard" TargetType="{x:Type NavigationWindow}">
    <Setter Property="MinWidth" Value="400"/>
    …
</Style>

I’m going to use attached properties to avoid any need to derive a GlassWindow class from Window; since we are using a NavigationWindow, it really wouldn’t make sense anyway. There are two parts you normally set: first a bool to turn it on, and then how far the glass extends into the client area. We simply need to extend down from the top, so we use this:

<Setter Property="glass:GlassEffect.IsEnabled" Value="True" />

<Setter Property="glass:GlassEffect.Thickness">
    <Setter.Value>
        <Thickness Top="35"/>
    </Setter.Value>
</Setter>

Implementing the properties

We can implement the attached properties like this; notice the extra handler definition for when IsEnabled is modified:

public static readonly DependencyProperty IsEnabledProperty =
    DependencyProperty.RegisterAttached("IsEnabled", typeof(Boolean), typeof(GlassEffect),
        new FrameworkPropertyMetadata(OnIsEnabledChanged));

The actual backing values are applied in the usual manner for Dependency Properties

[DebuggerStepThrough]
public static void SetIsEnabled(DependencyObject element, Boolean value)
{
    element.SetValue(IsEnabledProperty, value);
}

[DebuggerStepThrough]
public static Boolean GetIsEnabled(DependencyObject element)
{
    return (Boolean)element.GetValue(IsEnabledProperty);
}

And not forgetting the extra handler for IsEnabled which prevents Windows 7 losing the glass.

[DebuggerStepThrough]
public static void OnIsEnabledChanged(DependencyObject obj, DependencyPropertyChangedEventArgs args)
{
    // The property only makes sense on a Window; ignore anything else
    var wnd = obj as Window;
    if (wnd == null)
        return;

    if ((bool)args.NewValue)
    {
        wnd.Activated += wnd_Activated;
        wnd.Loaded += wnd_Loaded;
        wnd.Deactivated += wnd_Deactivated;
    }
    else
    {
        wnd.Activated -= wnd_Activated;
        wnd.Loaded -= wnd_Loaded;
        wnd.Deactivated -= wnd_Deactivated;
    }
}

Those handlers are all very similar, but I’ve chosen to implement them separately for simplicity’s sake:

[DebuggerStepThrough]
static void wnd_Deactivated(object sender, EventArgs e)
{
    ApplyGlass((Window)sender);
}

[DebuggerStepThrough]
static void wnd_Activated(object sender, EventArgs e)
{
    ApplyGlass((Window)sender);
}

[DebuggerStepThrough]
static void wnd_Loaded(object sender, RoutedEventArgs e)
{
    ApplyGlass((Window)sender);
}

Making glass

The code to actually turn on the glass is slightly more involved. Although it is based on lots of other examples on the web, note the DPI calculation, so that it still works correctly as soon as you move to a Windows installation running at 125% DPI. I’ve also got another property which defines the colour that should be painted in the glass sections, so that they match the window correctly when we are on XP or when glass isn’t turned on in Vista/7, and so that we handle the switch between active and inactive windows.

private static void ApplyGlass(Window window)
{
    try
    {
        // Obtain the window handle for the WPF application
        IntPtr mainWindowPtr = new WindowInteropHelper(window).Handle;
        HwndSource mainWindowSrc = HwndSource.FromHwnd(mainWindowPtr);

        // Get the system DPI (Graphics is disposable, so release it straight away)
        float desktopDpiX, desktopDpiY;
        using (System.Drawing.Graphics desktop = System.Drawing.Graphics.FromHwnd(mainWindowPtr))
        {
            desktopDpiX = desktop.DpiX;
            desktopDpiY = desktop.DpiY;
        }

        // Extend the glass frame into the client area.
        // The default desktop DPI is 96, so the margins are scaled to the system DPI:
        // left/right by the horizontal DPI, top/bottom by the vertical DPI,
        // rounded to the nearest pixel
        Thickness thickness = GetThickness(window);
        GlassEffect.MARGINS margins = new GlassEffect.MARGINS();
        margins.cxLeftWidth = (int)(thickness.Left * desktopDpiX / 96 + 0.5);
        margins.cxRightWidth = (int)(thickness.Right * desktopDpiX / 96 + 0.5);
        margins.cyTopHeight = (int)(thickness.Top * desktopDpiY / 96 + 0.5);
        margins.cyBottomHeight = (int)(thickness.Bottom * desktopDpiY / 96 + 0.5);

        int hr = GlassEffect.DwmExtendFrameIntoClientArea(mainWindowSrc.Handle, ref margins);
        if (hr < 0)
        {
            // DwmExtendFrameIntoClientArea failed (e.g. glass is turned off),
            // so paint the glass area in the caption colours instead
            if (window.IsActive)
                SetGlassBackground(window, SystemColors.GradientActiveCaptionBrush);
            else
                SetGlassBackground(window, SystemColors.GradientInactiveCaptionBrush);
        }
        else
        {
            // Let the glass show through the WPF render target
            mainWindowSrc.CompositionTarget.BackgroundColor = Color.FromArgb(0, 0, 0, 0);
            SetGlassBackground(window, Brushes.Transparent);
        }
    }
    // If not Vista or later (no dwmapi.dll), paint the background as a control
    catch (DllNotFoundException)
    {
        SetGlassBackground(window, SystemColors.ControlBrush);
    }
}
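ApplyGlass relies on GlassEffect.MARGINS and GlassEffect.DwmExtendFrameIntoClientArea, which aren’t shown here. A sketch of what those declarations typically look like, based on the Win32 MARGINS structure and the dwmapi.dll export. The GlassInterop class and its ScaleMargins helper are hypothetical; the helper pulls the DPI conversion out so it can be checked without a window:

```csharp
using System;
using System.Runtime.InteropServices;

public static class GlassInterop
{
    // Matches the layout of the Win32 MARGINS structure: four 32-bit ints.
    [StructLayout(LayoutKind.Sequential)]
    public struct MARGINS
    {
        public int cxLeftWidth;
        public int cxRightWidth;
        public int cyTopHeight;
        public int cyBottomHeight;
    }

    // Returns an HRESULT, negative on failure. dwmapi.dll only exists on
    // Vista and later, so calling this on XP throws DllNotFoundException.
    [DllImport("dwmapi.dll")]
    public static extern int DwmExtendFrameIntoClientArea(IntPtr hWnd, ref MARGINS pMarInset);

    // Converts device-independent units (1/96 inch) to device pixels,
    // using the horizontal DPI for left/right and the vertical DPI for
    // top/bottom, rounding to the nearest pixel.
    public static MARGINS ScaleMargins(double left, double top, double right, double bottom,
        float dpiX, float dpiY)
    {
        return new MARGINS
        {
            cxLeftWidth = ToPixels(left, dpiX),
            cxRightWidth = ToPixels(right, dpiX),
            cyTopHeight = ToPixels(top, dpiY),
            cyBottomHeight = ToPixels(bottom, dpiY),
        };
    }

    private static int ToPixels(double dips, float dpi) =>
        (int)Math.Round(dips * dpi / 96.0, MidpointRounding.AwayFromZero);
}
```

At 120 DPI (125%), the 35-unit top thickness from the style becomes 44 device pixels, while at 96 DPI it stays at 35.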

The only downside of this code is that it relies on System.Drawing.dll, which isn’t referenced by default in WPF projects. You simply have to remember to add the reference, or else you will get:

The type or namespace name 'Drawing' does not exist in the namespace 'System' (are you missing an assembly reference?)

Putting it all together

The final step is simply to turn on the glass by applying the style to the window. Since I’ve defined the style in a ResourceDictionary, you first merge it once into your App.xaml, as in the MergedDictionaries section below.

<Application x:Class="BlogWPFWizard.App"
             xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
             xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
             StartupUri="MainWindow.xaml">
    <Application.Resources>
        <ResourceDictionary>
            <ResourceDictionary.MergedDictionaries>
                <ResourceDictionary Source="Style\Win7wizard.xaml" />
            </ResourceDictionary.MergedDictionaries>
        </ResourceDictionary>
    </Application.Resources>
</Application>

And then we apply the style to the Window

<NavigationWindow x:Class="BlogWPFWizard.MainWindow"
                  xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
                  xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
                  Title="MainWindow" Height="350" Width="525"
                  Style="{StaticResource Win7Wizard}">
</NavigationWindow>

And there we have the glass, the only problem is you can’t see it, because the Navigation window is in the way. If you look really closely you can see the missing black line on either side of the Navigation header.

Building the glass layout

One quick and dirty way round this is to define an empty template for the NavigationWindow. I’m going to define a section for the top, whose Background we bind to the glass background generated above, and also a ContentPresenter into which the pages can be injected.

<Setter Property="Template">
    <Setter.Value>
        <ControlTemplate>
            <DockPanel x:Name="mainDock" LastChildFill="True">
                <!-- The border is used to compute the rendered height with margins.
                     topBar contents will be displayed on the extended glass frame. -->
                <Border DockPanel.Dock="Top"
                        Background="{TemplateBinding glass:GlassEffect.GlassBackground}"
                        x:Name="glassPanel" Height="36">
                    <!-- Wizard controls will go here -->
                </Border>
                <Border BorderThickness="{TemplateBinding Border.BorderThickness}"
                        BorderBrush="{TemplateBinding Border.BorderBrush}"
                        Background="{TemplateBinding Panel.Background}">
                    <!-- Content area, i.e. where Pages go -->
                    <AdornerDecorator>
                        <ContentPresenter Content="{TemplateBinding ContentControl.Content}"
                                          ContentTemplate="{TemplateBinding ContentControl.ContentTemplate}"
                                          ContentStringFormat="{TemplateBinding ContentControl.ContentStringFormat}"
                                          Name="PART_NavWinCP" ClipToBounds="True" />
                    </AdornerDecorator>
                </Border>
            </DockPanel>
        </ControlTemplate>
    </Setter.Value>
</Setter>

Running this proves our glass integration has worked, but also removes the chrome that makes the wizard work.

It does leave a perfect starting point for the next post.
